A new maturity model: Thinking about responsible use of AI

Responsible handling of AI starts with thinking about ethical issues. Eleven educational institutions, together with SURF, EUR and EDSA, tested how far along they are in this process. Although the use of AI in education and research is still in its infancy, there is already a model that helps institutions organise their approach to AI ethics.

The European Union's AI Act has arrived, and the first obligations will come into force in February 2025. This new legislation is presented as the world's first comprehensive AI law. Unlike when the GDPR was introduced, institutions now want to anticipate the upcoming changes and obligations.

In a pilot, 11 MBO institutions, universities of applied sciences and research universities assessed their maturity in AI ethics using the AI Ethics Maturity Model. This model was developed by Joris Krijger and Tamara Thuis, both PhD researchers at Erasmus University Rotterdam (EUR).

Given the recent rise of AI, it is no surprise that institutions are still at the beginning of their ethical maturity with regard to this disruptive technology. In 2022, the public at large was suddenly confronted with the previously unimaginable possibilities of AI.

Understanding where you stand

"I am not shocked that we are between level 1 and 2," says Wout van Wijngaarden, CIO at EUR. "After all, it is a new topic. I think it is great that the model gives insight into where you stand and supports you with tools to grow further. I see the AI Ethics Maturity Model as a tool that will really help us move forward."

The ROC van Amsterdam-Flevoland also took part in the pilot, because they too expect a great deal to come their way in education and research. "We are a large MBO and VO (secondary education) institution," says René van den Berg, director of Education, Information and IT, "but even we can't do it alone anymore.

"AI has a major impact on the digital transformation for our staff, students and pupils. Are we ready to use the technology properly? Awareness must now permeate all layers. We need to ask ourselves: What do we want, what don't we want, and where do we draw the line?"

In a practice session almost a year ago, René and board members of an MBO college used AI to create a teaching programme, including self-directed learning formats.

"Everyone was amazed by the possibilities and the speed," René says. He believes that transparency is needed on issues such as:

What do you do with the data of students, pupils and staff using AI? What does AI mean for our examinations? What does AI mean for the personalisation of learning?

Sharing experiences and knowledge

As one of the creators of the maturity model, Joris Krijger sees that many people within organisations are already working on the subject. "The pilot has strengthened cooperation. What we see at all the participating institutions is that the model brings together people who are already doing something in this area."

The effect is not limited to individual institutions: the AI Ethics Maturity Model also helps institutions share experiences and knowledge with one another. "The model gives structure and inspires," says Jan van den Berg, data protection officer (FG) at the Amsterdam University of Applied Sciences (HvA), who supervised the pilot. "With this framework, you can exchange best practices among yourselves very well."

Wout sees even broader possibilities: "This framework offers a lot that we can work on together as a sector. Using maturity models to evaluate and improve is a good development for the education sector."

René adds: "We are on a journey together. We look at each other's considerations and see how others implement such a model. These topics are such big developments for individual institutions that you have to tackle them together."

Ethics versus ethical action

It is important to note that the model indicates how well you as an organisation can deal with ethical dilemmas around AI, not whether you are ethical or act ethically.

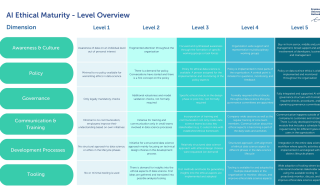

Joris explains, "The AI Ethics Maturity Model is the result of 15 sessions with different organisations on how they deal with ethical dilemmas around AI. From these, six dimensions emerged; organisational preconditions you need to have in place as an organisation to be able to deal with AI ethics in a meaningful way. Within these, you can grow – or drop – between levels 1 to 5."

The dimensions are all aspects of an organisation: Awareness and culture, policy, governance, communication and training, development processes and tooling. At level 1, everything is still fragmented and at level 5, thinking about ethical considerations when deploying AI is business as usual.

The AI Ethics Maturity Model

Setting priorities

Underlying the maturity model is Joris's fascination with how technology affects us, and how we affect technology. "These dynamics are often very subtle and invisible, but they do affect how we look at each other and the world, and how we interact with each other. I consider ethical issues the most interesting issues out there, because they are about the direction we take as a society. With AI, one factor is that we are going to delegate decisions to systems, and if those systems don't know what we consider important, they are not going to act accordingly."

"By looking in a structured way at where you are as an organisation and where you think you should be, priorities emerge," Joris notes. "Institutions can work on those. After six months or a year, when those priorities have been picked up, you run the model again and see what the next steps are to grow.

"If you really want to change how you deal with AI, you not only have to start using the technology differently, but you also have to think about what you consider important as an organisation, and make sure this is reflected in your procurement and development processes around AI."

AI ethics as strategic steering

Partly for this reason, the HvA took an organisation-wide approach to participating in the pilot. That's not necessary, by the way; a quick test in half a day also provides valuable insights into where you stand.

"But," Jan continues, "if you want results from the maturity model to have organisational meaning, you have to do the test with the involvement of the Executive Board." On behalf of the Executive Board, he organised expert sessions. He then examined the outcomes and elaborated on them in a plenary session. "A plenary session helps to increase organisational support. If you want to set this up properly, you have to incorporate it professionally into your operations to avoid getting stuck in an ad hoc way of working that is frustrating for everyone."

Wout stresses that the Executive Board must mandate the development of an AI strategy in line with the organisational strategy. "Don't use AI ethics as an outboard engine; incorporate it into the strategic steering."

Imparting values

"Above all, make it fun," is Jan's advice. "Encourage critical thinking. During an expert session on the AI Act, Hans de Zwart, researcher and lecturer at the HvA, introduced the concept of moral imagination as a way of thinking about the unexpected consequences of technology. The idea is that this is a capacity you can train, develop and deploy when determining your own position on AI. So practise! Reasoning from ethics gets your imagination working."

Text: Maureen van Althuis

'A new maturity model: thinking about responsible AI use' is an article from SURF Magazine.

Questions following this article? Mail to magazine@surf.nl.