High-Performance Machine Learning: efficient and scalable machine learning in HPC environments

Gain insights faster by running complex machine learning algorithms efficiently on high-performance computing hardware. Our service helps you scale out ML model training on the large-scale Dutch national infrastructure. We also help you enhance your numerical simulations with machine learning techniques.

Efficient use of Machine/Deep Learning frameworks

With the advances in deep learning techniques over the past decade, a growing number of research groups are interested in incorporating machine learning into their processes. Because deep learning training workloads are compute-hungry, researchers need efficient, system-optimized installations of complex, high-level frameworks such as TensorFlow, Keras, or PyTorch. Furthermore, since SURF operates heterogeneous systems, both CPU-optimized and GPU-optimized frameworks are supported.

Scaling Deep Learning workloads

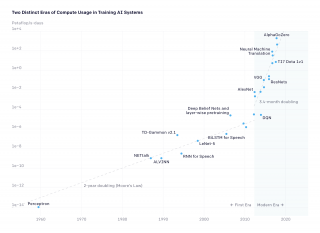

Deep neural network training of high-fidelity models is already an exascale task. In fact, some of the largest models proposed, such as OpenAI’s GPT-3 or DeepMind’s AlphaGo, require zettaFLOPs of compute to train. These computational requirements keep increasing at a pace much faster than Moore’s law, as shown in Figure 1. As the datasets used for training grow, models become more accurate at the expense of even longer training times. The way forward is to use HPC resources efficiently for distributed training, and to gradually tackle the compute, communication, and I/O challenges that arise. SURF’s High-Performance Machine Learning (HPML) team has extensive experience in scaling out deep learning workloads for:

On both CPU-based and GPU-based supercomputers, using TensorFlow, Keras, or PyTorch.

Figure 1: AI and compute (courtesy of OpenAI)
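To make the idea of distributed training more concrete, here is a minimal sketch of one common approach, data parallelism: each worker computes a gradient on its own shard of the data, and the gradients are averaged before the model is updated. This is a toy illustration in plain Python and not a description of SURF's setup. The model (y = w * x), the data, and all function names are assumptions made for the example. On a real system the averaging step would be a collective operation provided by a framework such as PyTorch or TensorFlow.

```python
# Toy data-parallel training: hypothetical names, synthetic data, single process.
# Each "worker" owns one shard, computes a local gradient, and the gradients
# are averaged (the role an all-reduce plays on a real cluster).

def local_gradient(w, shard):
    """Gradient of the mean squared error of y = w * x on one shard."""
    return sum(2.0 * (w * x - y) * x for x, y in shard) / len(shard)

def all_reduce_mean(values):
    """Stand-in for a collective all-reduce: returns the mean of all values."""
    return sum(values) / len(values)

def split_into_shards(data, n_workers):
    """Split data into equally sized shards (len(data) must divide evenly)."""
    size = len(data) // n_workers
    return [data[i * size:(i + 1) * size] for i in range(n_workers)]

def data_parallel_step(w, shards, lr):
    """One synchronous update: local gradients, then average, then step."""
    # On real hardware these gradients would be computed concurrently.
    grads = [local_gradient(w, shard) for shard in shards]
    return w - lr * all_reduce_mean(grads)

# Synthetic data generated from y = 3 * x, split over 4 simulated workers.
data = [(float(x), 3.0 * x) for x in range(1, 9)]
shards = split_into_shards(data, n_workers=4)

w = 0.0
for _ in range(200):
    w = data_parallel_step(w, shards, lr=0.01)

print(w)  # converges towards the true slope of 3.0
```

Because the shards are equally sized, the averaged gradient equals the gradient over the full batch, so the result matches single-worker training. What changes at scale is the cost of communication and I/O, which is where the challenges mentioned above come in.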

Applying Deep Learning to Science & Engineering

As Moore’s law approaches its end, scientific and engineering applications that typically rely on numerical simulations are struggling to address ever larger problems. Scientists have recently begun exploring deep learning techniques to improve the understanding of complex systems that are traditionally solved with large-scale numerical simulations on HPC systems. Over the last two years, in close collaboration with the SURF Open Innovation Lab and our members, we have carried out a number of experiments in various scientific domains. We extracted guidelines from these experiments and published them in the whitepaper "Deep-learning enhancement of large scale numerical simulations".

Support

As a user, you can always count on us for support. SURF provides support for the use of machine learning frameworks on the Snellius supercomputer. For example, we can help you with distributed training for large models or datasets, or hold consultation meetings to discuss how machine learning techniques can be applied to your scientific problem. Furthermore, we organize training sessions together with member institutes.

Collaboration projects

We participate in a number of different projects and initiatives that aim to contribute at the intersection of machine learning and high-performance computing:

- European projects: EuroCC, ExaMode, ReaxPro

- National programs: EDL, eTEC-BIG, DL4HPC

- Collaborations with industry: Intel

We are open to discussing (future) project proposals where we could collaborate. Inquiries can be submitted through our Service Desk.

Helpdesk

You can contact our helpdesk by telephone or email, but we can also assist you in person. If you have any questions or want to report a problem, please raise a ticket with our helpdesk at https://servicedesk.surf.nl/ or call +31-208001400. The helpdesk is available during office hours (9:00–17:00).

If you would like specific advice about large-scale distributed deep learning training or applying deep learning techniques to your scientific problem, please get in touch with us: