High-performance data processing

Processing and storing large datasets

For large, structured datasets

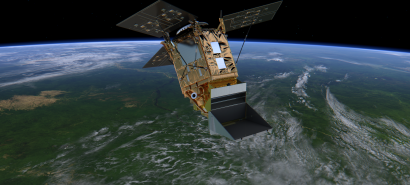

Our high-throughput data processing services are suited to projects that need to process large, structured datasets, such as instrument data from sensors, DNA sequencers, telescopes and satellites.

Use data processing services for:

- Collaborating on a shared set of data and software

- Parallel processing of large amounts of data, from many terabytes to petabytes

- Processing large independent simulations and workflows

- Optimised data transport thanks to a scalable high-bandwidth network

- Easy access to scalable data storage solutions

Nobel Prize-winning research into gravitational waves uses the Grid

Tailored support

High-performance data processing projects need support in transmitting, storing, accessing and processing large volumes of data. We have extensive experience in all aspects of large-scale data processing. We offer specialised support in building customised production solutions for your research community.

Data processing platforms

We have two powerful data processing platforms to cater for different needs:

Grid: for scalability and collaboration

Grid offers vast, scalable computing capacity through a federation of international clusters linked by a high-speed network. These clusters are located in data centres around the world.

Benefits of Grid:

- Access to the European Grid for federated data processing

- Huge processing capacity geared towards steady production workloads

- The ability to collaborate with large research communities in different locations

Spider: for more flexibility

Spider is a dynamic, flexible and customisable platform hosted locally at SURF. It is optimised for collaboration and supported by an ecosystem of tools that allow data-intensive projects to be launched quickly and easily.

Benefits of the Spider platform:

- Interactive processing with a user-friendly interface

- Private nodes and clusters for customised production and availability

- Collaboration in a private and secure project environment

Technical specs and user documentation

More (technical) information on using these platforms:

This service is ISO 27001-certified

This means it meets the requirements of ISO 27001, the international standard for information security management.